Machine learning (ML) has become an integral part of modern business strategies, enabling organizations to make data-driven decisions and gain predictive insights. However, developing a machine learning model is only part of the process. To fully leverage its potential, organizations must deploy these models effectively in production environments, where they can provide real-time, actionable insights at scale. This article explores the process of implementing and deploying machine learning models, focusing on ensuring scalability, performance, and real-time insights.

1. The Importance of ML Model Deployment

Once a machine learning model has been developed and trained, the next critical step is to deploy it to a production environment where it can solve real-world problems. The deployment phase ensures that the model is accessible for predictions, integrates seamlessly with other systems, and operates efficiently at scale. Successful deployment provides several benefits, including:

- Real-Time Decision Making: Deployed ML models can process incoming data in real time and return actionable insights.

- Automation: ML models automate tasks such as fraud detection, predictive analytics, and personalized recommendations.

- Scalability: A well-architected deployment can absorb growing data volumes and user requests without degrading performance.

- Business Value: A well-deployed model continuously generates value, improving customer satisfaction, reducing costs, and optimizing operations.

2. Steps for Deploying ML Models

Deploying machine learning models involves several critical steps, from preparing the model for deployment to monitoring its performance in production. Below is a comprehensive guide to these steps:

a. Model Preparation for Deployment

Before deploying a model, businesses must package and optimize it for the target environment. Model preparation involves several key tasks:

- Serialization: The trained model must be serialized (e.g., saved as a .pkl, .joblib, or .h5 file) so that it can be loaded and run in the production environment.

- Model Optimization: To ensure performance, businesses may need to optimize the model. This can involve pruning (removing unnecessary parameters), quantization (reducing precision), or converting it into a format compatible with specific frameworks or hardware.

- Versioning: Keeping machine learning models under version control makes it easier to manage updates and to roll back to a previous version if necessary.
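The serialization and versioning steps above can be sketched as follows. This is a minimal illustration, not a production pattern: the model is assumed to be a plain dictionary of parameters, and the function names `save_model` and `load_model` and the file-naming scheme are hypothetical. Note that pickle files should only be loaded from trusted sources, because unpickling can execute arbitrary code.

```python
import hashlib
import json
import pickle
import tempfile
from pathlib import Path


def save_model(model, directory: Path, version: str) -> Path:
    """Pickle the model and write a manifest holding its version and checksum."""
    directory.mkdir(parents=True, exist_ok=True)
    payload = pickle.dumps(model)
    model_path = directory / f"model-{version}.pkl"
    model_path.write_bytes(payload)
    manifest = {"version": version, "sha256": hashlib.sha256(payload).hexdigest()}
    (directory / f"model-{version}.json").write_text(json.dumps(manifest))
    return model_path


def load_model(directory: Path, version: str):
    """Load a model only if its bytes match the checksum in the manifest."""
    payload = (directory / f"model-{version}.pkl").read_bytes()
    manifest = json.loads((directory / f"model-{version}.json").read_text())
    if hashlib.sha256(payload).hexdigest() != manifest["sha256"]:
        raise ValueError(f"checksum mismatch for model version {version}")
    return pickle.loads(payload)
```

Storing a checksum next to each versioned file is one simple way to detect a corrupted or swapped artifact before it is loaded; dedicated model registries typically offer richer metadata.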

b. Choosing the Deployment Environment

Once the model is ready, businesses must select the appropriate environment for deployment. Common deployment environments include:

- On-Premises: For businesses with strict data security or regulatory requirements, deploying models on internal servers may be preferred.

- Cloud Platforms: Cloud-based platforms like AWS, Google Cloud, and Microsoft Azure offer scalable, flexible, and cost-effective deployment options. These platforms also provide services such as auto-scaling and monitoring tools.

- Edge Devices: For low-latency use cases, ML models can be deployed on edge devices (e.g., IoT devices, mobile phones, or embedded systems) so that predictions are made close to where the data is generated.

c. Deployment Strategies

Different deployment strategies can be adopted based on the specific use case, system architecture, and requirements for real-time performance. Key strategies include:

- Batch Deployment: In batch deployment, models process data in intervals, often at scheduled times (e.g., every hour or day). This works for use cases where real-time predictions aren’t necessary.

- Real-Time Deployment: For real-time insights, models are deployed to process incoming data and deliver predictions immediately. This is often achieved through APIs or microservices architectures.

- Canary Releases and Blue/Green Deployment: To reduce risk, businesses can roll out new models gradually. In a canary release, the new model serves a small percentage of users first and its share grows as confidence increases. In a blue/green deployment, the old and new versions run in parallel in separate environments, and traffic is switched to the new version only after it has been verified to work as expected.
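As an illustration of the canary approach, the sketch below routes a fixed fraction of users to the new model by hashing their user ID. The function names and the "stable"/"canary" labels are hypothetical; real systems often do this at the load balancer or service mesh rather than in application code.

```python
import hashlib


def canary_bucket(user_id: str) -> float:
    """Map a user ID to a stable, roughly uniform value in [0, 1)."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big") / 2**32


def choose_model(user_id: str, canary_fraction: float) -> str:
    """Return 'canary' for roughly canary_fraction of users, else 'stable'."""
    return "canary" if canary_bucket(user_id) < canary_fraction else "stable"
```

Hashing the user ID, rather than drawing a random number per request, keeps each user on the same model version across requests, which makes the two groups easier to compare.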

d. Integrating the Model into Production Systems

After deployment, businesses must integrate the model with existing systems. This could involve:

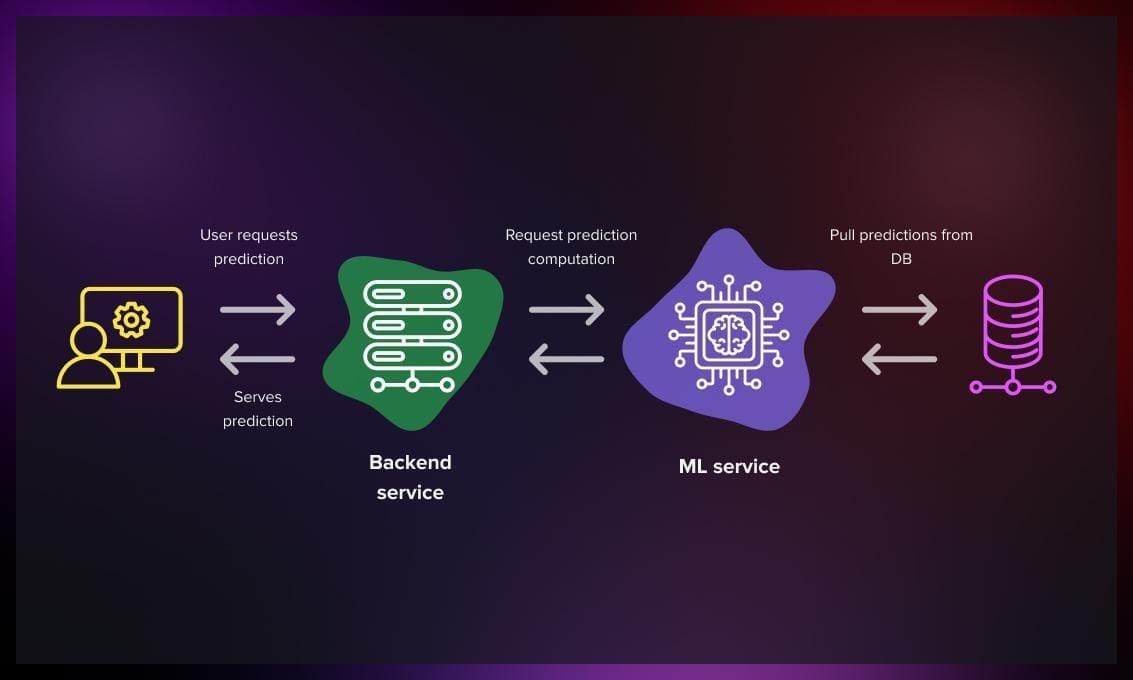

- APIs: Exposing the model via REST or gRPC APIs enables other applications to interact with it for real-time predictions or data analysis.

- Microservices: If the model is part of a microservices architecture, it can be deployed as a containerized service (e.g., packaged with Docker and orchestrated with Kubernetes) that interacts with other services.

- Data Pipelines: Models may connect to data pipelines, ingesting real-time or batch data for analysis and predictions.
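To make the API option concrete, here is a minimal sketch of a REST-style prediction endpoint built only on Python's standard library. The `/predict` path, the two-feature input, and the fixed linear `predict` function are assumptions made for the example; a real service would load a serialized model and would more likely use a dedicated web framework.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def predict(features):
    """Hypothetical model: a fixed linear score standing in for a loaded model."""
    weights = [0.4, 0.6]
    return sum(w * x for w, x in zip(weights, features))


def handle_predict(body: bytes):
    """Validate a JSON request body and return (status_code, response_dict)."""
    try:
        features = json.loads(body)["features"]
        if not isinstance(features, list) or len(features) != 2:
            raise ValueError("expected a list of two numbers")
        return 200, {"prediction": predict([float(x) for x in features])}
    except (ValueError, KeyError, TypeError) as exc:
        return 400, {"error": str(exc)}


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        status, payload = handle_predict(self.rfile.read(length))
        data = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)


def serve(host="127.0.0.1", port=8000):
    """Start the server; blocks until interrupted."""
    HTTPServer((host, port), PredictHandler).serve_forever()
```

Calling `serve()` starts the service locally, and a client can then POST a body such as `{"features": [1, 2]}` to `/predict`. Keeping validation in `handle_predict`, separate from the HTTP plumbing, makes the logic easy to test without opening a socket.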

e. Ensuring Scalability and Performance

The performance of a deployed model is critical for delivering valuable insights and supporting business operations. Scalability ensures the model can handle increasing traffic and data load. Key considerations for scalability and performance include:

- Load Balancing: Distributing incoming requests across multiple model instances prevents any single instance from becoming a bottleneck and keeps the service responsive during periods of high demand.

- Auto-Scaling: Cloud services offer auto-scaling capabilities to automatically add or remove computing resources based on demand, keeping the model performant without manual intervention.

- Caching: For frequently requested predictions, businesses can cache results to reduce processing time and increase throughput.
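Two of the ideas above, load balancing and caching, can be sketched in a few lines. The `RoundRobinBalancer` class, the `CALLS` counter, and the averaging "model" are hypothetical placeholders; in practice load balancing is usually handled by infrastructure rather than application code.

```python
import itertools
from functools import lru_cache


class RoundRobinBalancer:
    """Cycle through model replicas so each receives an equal share of requests."""

    def __init__(self, replicas):
        if not replicas:
            raise ValueError("at least one replica is required")
        self._cycle = itertools.cycle(replicas)

    def next_replica(self):
        return next(self._cycle)


CALLS = {"count": 0}  # counts real model invocations, to make cache hits visible


@lru_cache(maxsize=1024)
def cached_predict(features: tuple) -> float:
    """Score a feature tuple; repeated inputs are served from the cache."""
    CALLS["count"] += 1
    return sum(features) / len(features)  # placeholder for an expensive model call
```

Caching of this kind assumes the model is deterministic and that inputs are hashable (hence the tuple). The cache should also be cleared, for example with `cached_predict.cache_clear()`, whenever a new model version is deployed, so that stale predictions are not served.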

f. Monitoring and Maintenance

Once the model is deployed, businesses must continuously monitor and maintain it to ensure it remains performant and relevant. Key aspects include:

- Performance Monitoring: Track metrics such as latency, throughput, and error rates to ensure the model functions as expected.

- Model Drift Detection: Over time, a model’s performance may degrade due to changes in data distribution. Regularly evaluating the model’s accuracy and retraining it with fresh data is crucial for maintaining optimal performance.

- Logging: Implementing robust logging mechanisms allows businesses to capture information about the model’s predictions, inputs, and errors for troubleshooting and performance analysis.
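One way to detect a shift in data distribution is to compare a live sample of a feature against a reference sample from training time. The sketch below uses the Population Stability Index (PSI), which is a technique chosen here for illustration and not one the steps above prescribe; the bin count and the smoothing constant `eps` are assumptions.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a reference sample and a live sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width when all values are equal

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1  # clamp out-of-range values
        # Floor each proportion at eps so empty bins do not cause log(0).
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

A PSI of zero means the binned distributions match. A commonly cited rule of thumb treats values above roughly 0.25 as a significant shift worth investigating, but the right threshold depends on the feature and the application.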

g. Model Retraining and Updating

ML models are rarely static. As new data becomes available or business requirements change, models need to be retrained and updated. To maintain accuracy:

- Continuous Retraining: Set up a pipeline that periodically retrains the model on new data, validates the result, and redeploys it.

- Version Control and Rollbacks: Track different versions of the model to allow easy rollback if a newly deployed version doesn’t perform as expected.
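The rollback idea can be illustrated with a small in-memory registry. The `ModelRegistry` class and its methods are hypothetical names for this sketch, and models are assumed to be plain callables; a production registry would persist versions and metadata.

```python
class ModelRegistry:
    """In-memory record of deployed model versions, with rollback."""

    def __init__(self):
        self._models = {}   # version -> model callable
        self._history = []  # deployment order, newest last

    def deploy(self, version: str, model) -> None:
        self._models[version] = model
        self._history.append(version)

    @property
    def active_version(self):
        return self._history[-1] if self._history else None

    def rollback(self) -> str:
        """Drop the newest deployment and reactivate the one before it."""
        if len(self._history) < 2:
            raise RuntimeError("no earlier version to roll back to")
        self._history.pop()
        return self._history[-1]

    def predict(self, x):
        return self._models[self.active_version](x)
```

For example, after deploying "v1" and then "v2", a call to `rollback()` makes "v1" the active version again without redeploying it.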

3. Best Practices for Machine Learning Model Deployment

To ensure successful deployment, businesses should follow these best practices:

- Automated Deployment: Implement CI/CD (Continuous Integration/Continuous Deployment) pipelines for automated model deployment, testing, and monitoring.

- Security and Compliance: Ensure the deployed model complies with data privacy laws and industry regulations. Implement secure authentication, data encryption, and access controls to protect sensitive data.

- Model Explainability: Make model predictions explainable, particularly in industries where decisions must be transparent and auditable (e.g., finance and healthcare).

- Collaboration Between Data and DevOps Teams: Successful deployment requires close collaboration between data scientists, software engineers, and DevOps teams to ensure smooth integration into production systems.
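As one example of automated testing in a CI/CD pipeline, a promotion gate can compare a candidate model's evaluation metrics with those of the model currently in production. The metric names (`accuracy`, `p95_latency_ms`) and the default thresholds below are assumptions chosen for illustration.

```python
def should_promote(candidate: dict, baseline: dict,
                   max_accuracy_drop: float = 0.01,
                   max_latency_ms: float = 100.0) -> bool:
    """Allow deployment only if accuracy has not regressed and latency is acceptable."""
    accuracy_ok = candidate["accuracy"] >= baseline["accuracy"] - max_accuracy_drop
    latency_ok = candidate["p95_latency_ms"] <= max_latency_ms
    return accuracy_ok and latency_ok
```

A pipeline step could call this function after evaluation and fail the build when it returns `False`, so that a regressed model never reaches production automatically.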

4. Challenges in ML Model Deployment

Deploying ML models comes with challenges. Some common hurdles include:

- Handling Large Volumes of Data: As data grows, maintaining performance and scalability becomes challenging. Techniques like distributed computing and cloud-native solutions can help.

- Latency and Real-Time Processing: Achieving low-latency predictions for real-time use cases, such as fraud detection or autonomous vehicles, requires specialized infrastructure and optimized models.

- Model Interpretability: Many complex models, like deep learning networks, are “black boxes,” making it difficult to understand how they arrive at decisions. Addressing this challenge requires tools and techniques for model interpretability.
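One model-agnostic interpretability technique is permutation importance: shuffle one feature at a time and measure how much the model's error grows. The sketch below is a simplified version under stated assumptions: rows are lists of numbers, the error metric is mean squared error, and `predict` is any callable that scores a single row.

```python
import random


def permutation_importance(predict, rows, targets, n_features, seed=0):
    """Increase in mean squared error when each feature column is shuffled."""
    rng = random.Random(seed)

    def mse(data):
        return sum((predict(r) - t) ** 2 for r, t in zip(data, targets)) / len(data)

    baseline = mse(rows)
    importances = []
    for j in range(n_features):
        column = [r[j] for r in rows]
        rng.shuffle(column)
        # Rebuild each row with only feature j replaced by its shuffled value.
        shuffled = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
        importances.append(mse(shuffled) - baseline)
    return importances
```

A feature the model ignores yields an importance of zero, because shuffling it leaves every prediction unchanged, while a feature the model relies on yields a positive value. Averaging over several shuffles gives more stable estimates than the single pass shown here.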

5. Conclusion

Machine learning model deployment is a critical step in transforming a trained model into a real-world, scalable solution. Successful deployment ensures that the model performs well, provides real-time insights, and integrates smoothly with business processes. By selecting the right deployment environment, ensuring scalability, maintaining performance, and continuously monitoring and updating models, businesses can extract maximum value from their machine learning investments. With the right strategy, tools, and best practices, ML models can deliver significant benefits, including automation, improved decision-making, and enhanced customer experiences.
